This week Alex Hayes asked me to sit in on an Advertising Week APAC panel on the promise of technology. I know Alex from his Mumbrella days. A few years back he set up Clear Hayes Consulting – “marketing to marketers” – and he’s built a successful and expanding business from scratch, something I find admirable and kind of miraculous.

I prepped for the panel, as I always do, by writing a series of notes on subjects Alex wanted to cover.

Question: What’s the opportunity technology provides to make the media and marketing industry and the overall internet better for people?

We have in our minds the idea that we are somehow in a transitional time. That there will be a moment some time in the future when we will have arrived, the turmoil will be over, and the promise of technology will finally be realised.

But this will never happen.

There will be no moment when the technology transition is complete. Promise turns to reality and reality turns out to be flawed and impermanent. The Roman emperor Marcus Aurelius wrote “the universe is change” in his diary many centuries ago. It’s still true, and it will remain true for as long as we’re around.

For me, this means the big opportunities lie with people and not technology.

Many opportunities hinge on training, collaboration and cooperation.

I love the idea of education and progression for everyone, not just the people who are known as students. People who are learning and progressing exude a sense of excitement and energy. This is good for companies and organisations.

Initiatives such as the Digital News Academy are great for this reason. This is a hook-up between News Corp, Google and the University of Melbourne, to train 250 journalists in aspects of modern reporting, like data journalism. It’s running right now.

There is a programme currently running at the BBC called Local News Partnerships. It has a unit called the Shared Data Unit, the SDU, and this is really brilliant. I have had the opportunity to sit in on some of their sessions. They train journalists from small commercial newsrooms in data journalism, using real examples, and then provide the output to the BBC and all partners for publication. For example, they have investigated the rise of illegal rubbish dumping, and the rise of online casinos during lockdown.

These sorts of initiatives need not be restricted to news media.

Question: What are the technologies that have you excited and how do you see them changing the way we do business in the next five years?

At the moment there is a huge opportunity around AI language and image models. These generative AIs, with billions or even trillions of parameters, are trained on enormous quantities of text or images. The most famous language model is GPT-3. It won’t be long before GPT-4 comes out, and there are plenty of competing models.

I have used GPT-3 extensively.

GPT-3 is already amazing and it’s only going to become more amazing. It can write computer code, generate poetry and write short university essays. It can mimic just about any writing style.

It is not a useful tool for journalism, because it doesn’t care a jot about truth.

It will happily tell you that the kangaroo is native to New Zealand. It tells lies with deadpan confidence.

What GPT-3 and these other models are good for is acting as an accelerant to creativity. And this is right now. This is not, “let’s wait and see”. I recommend you get a login and start playing with these engines.

Similarly with image generators. The most famous of these is DALL-E, but there is another called MidJourney currently in open alpha that I have played around with.

I can tell you now that magazine illustrators are out of a job.

When I say these are “accelerants to creativity”, what I mean is that they are currently adjuncts to the creative process and should be used that way. For example, you might know that your business needs a rebrand. Or you might be trying to think of a certain segment of your audience or customer base. If you want to picture that, go to MidJourney or DALL-E and ask it to paint you the picture. It will come back with four options in less than a minute. These probably won’t be perfect, but they will get you thinking. There will be something to see, something for your mind to get working on.

There are big opportunities in using the engines in combination, as they exist right now.

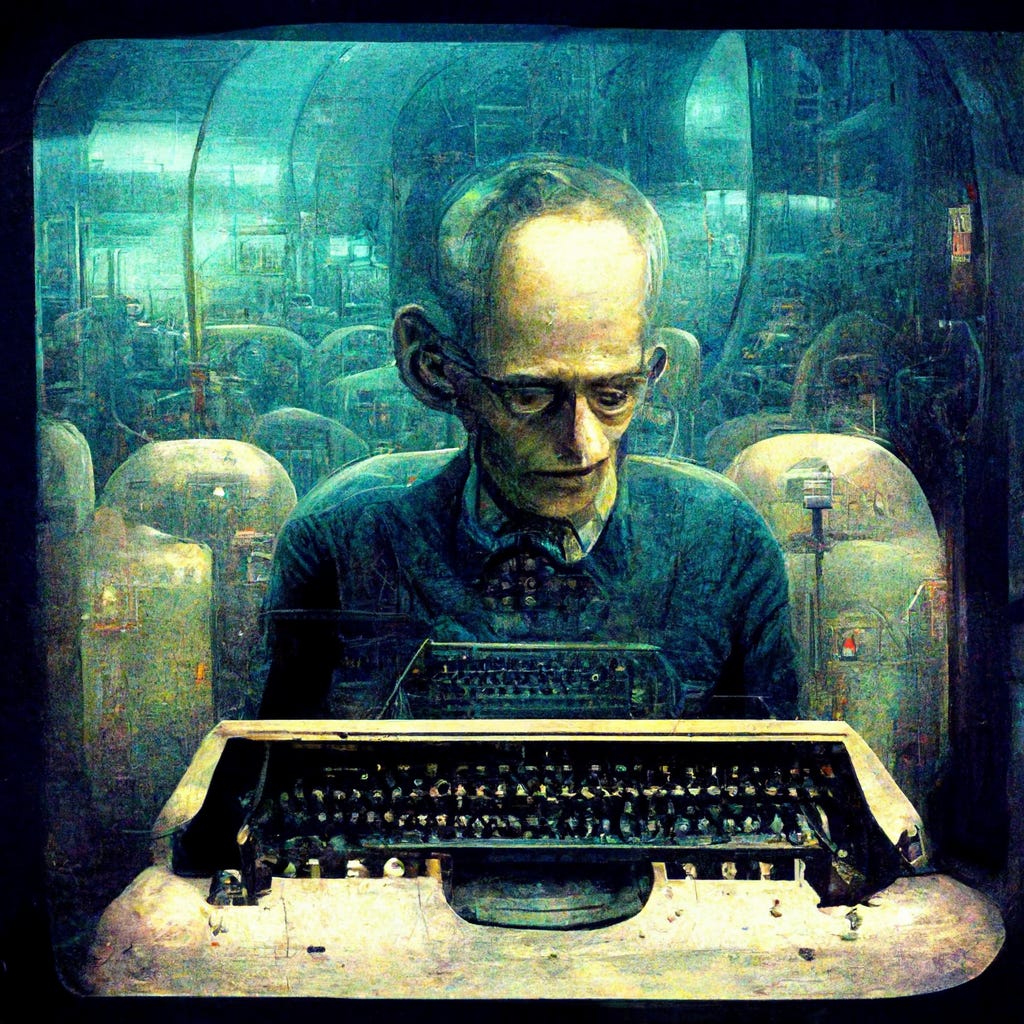

I want to show you some examples. The first two are images MidJourney generated after I supplied the text prompts:

“a writer sitting inside an enormous machine that is actually smarter than him”

“journalist Alex Hayes hosting a panel discussion while intoxicated in the style of Dali”

It becomes even more interesting when you co-opt GPT-3 to provide the input to MidJourney. These are the exact words I put into GPT-3:

“Describe a move poster”

It came back with this:

“The movie poster for A Silent Voice features a close-up of the film’s protagonist, Shoko Nishimiya, against a light blue background. She is shown from the shoulders up, looking directly at the viewer with a slight smile. Long black hair, braids and she wears a school uniform. The movie title is written in white block letters above her head, director Naoko Yamada, is written in small print below.”

I cut and pasted this into MidJourney (adding a one-word instruction to make it movie-poster proportioned), whereupon the AI produced the following image as one of four options:

Next I asked GPT-3 to describe an “Australian science fiction movie poster” and the same process yielded this nightmarish scene:

A final example shows how flexible the system can be creatively. First I asked GPT-3 to come up with five names for a “premium media consulting business”. Result:

1. Parnassus Media
2. Mnemosyne Media
3. Titan Media
4. Gaea Media
5. Demeter Media

I chose Demeter Media, and asked GPT-3 to describe the logo for the business. Result:

“The logo for Demeter Media is a simple golden yellow sun with a brownish orange sunburst emanating from the center. The word “Demeter” is written in simple black lettering across the center of the sun.”

I input this into MidJourney for four options. Result:

You will see that MidJourney didn’t nail the brief, for either the movie poster or the logo, and it doesn’t know how to spell, write, or follow clear instructions about type. But we’re not after a finished product here. Instead we’re looking at a human-led editorial process that creates stepping-off points. Generating all the above examples was the work of 15 minutes. Each image is entirely original, and if you repeat the process you get different results.

Postscript: Understanding is a creative act

To create meaning, you have to have both a speaker and a listener, a transmitter and a receiver.

Perhaps AI generators are part of a bigger trend that we will have to get our minds around: de-emphasising the creators and emphasising the “understander” (reader, viewer, listener, consumer). I don’t doubt that within a couple of decades, AIs will be able to generate compelling stories and then execute those stories in any medium. This will require a shift in the way we think and attribute value. In this world, more emphasis falls on the act of understanding than on the traditionally high-status acts of writing, speaking, and inventing.