“The machines have acquired language. The ability to express ourselves in complex prose has always been one of our defining magic tricks as a species … we have crossed an important threshold.”

This is a quote taken from a well-researched article in the New York Times, not science fiction.

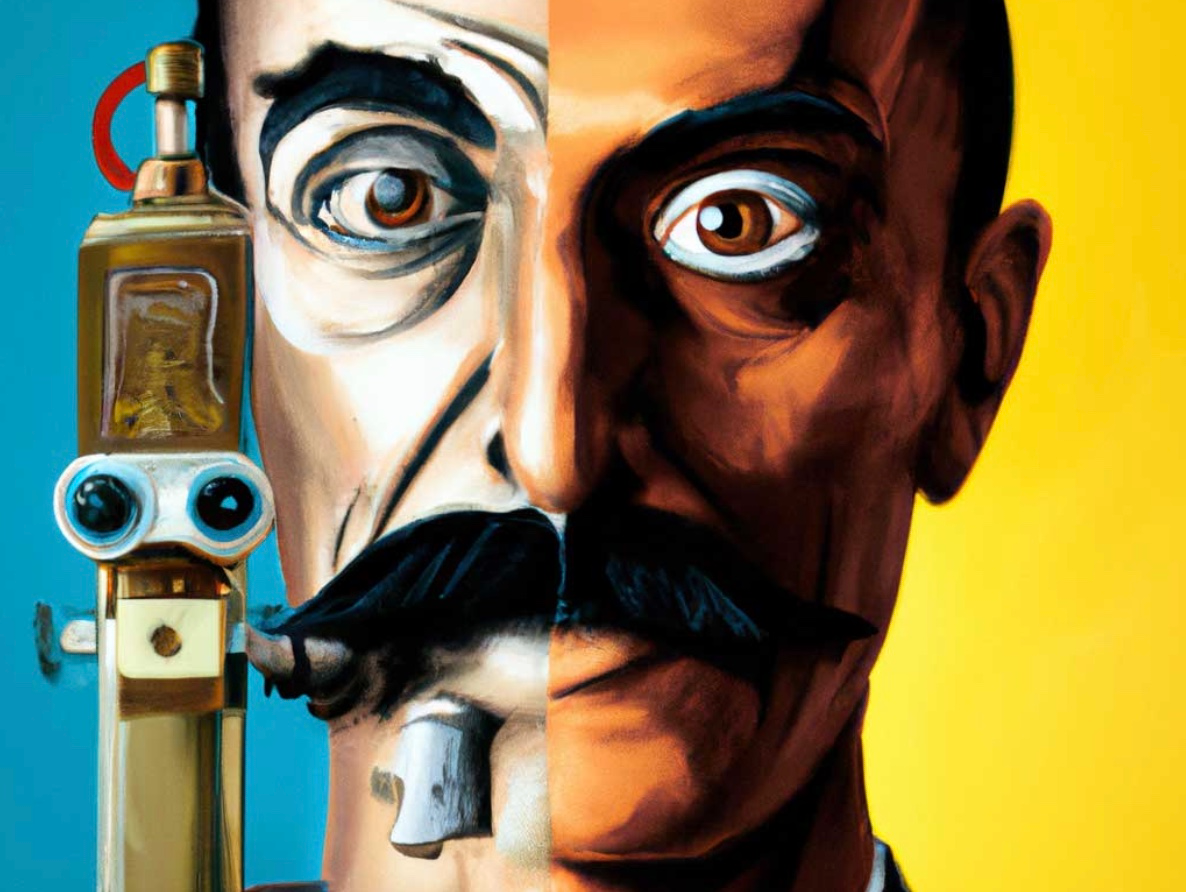

At the beginning of the year I asked “Where will AI lead us?” in terms of the ability to generate convincing stories from scratch. I think we may find out the answer to that question sooner than I expected. I have been playing with the public-release version of the DaVinci GPT-3 model – the current state-of-the-art in language-generation AI – and can verify that the citadel of linguistic prowess has been breached and will soon be taken. Skills central to the craft of news, long held dear by editors, will soon be automated. It turns out that writing well isn’t some kind of magical process, but succumbs to computational assault in the same way that hand-weaving succumbed to the power loom.

Much has been written about GPT-3 since Silicon Valley’s OpenAI released it in 2020. Knowing that the name stands for Generative Pre-trained Transformer doesn’t do much to help you understand it. The New York Times piece does a great job of describing how GPT-3 was built: by a machine playing a game with itself, trying to guess the next word in a sentence. The sentences it used for training were taken from the vast trove of English text available online.

Networks using the network

In the early 90s, before I escaped university for the newsroom, I studied neural networks. They were the next big thing. The networks I was studying weren’t quite right though, and they spent long years in the tech wilderness before being re-invented and reborn in iterative learning networks like GPT-3. The thing about these networks is how brutally persistent they are. Once you make a system that inches the smallest amount towards success, all you have to do is iterate. Use more computational power, grab a bigger data set, run the algorithm longer. Change something and do it again, ad nauseam. The biggest challenge is knowing what success looks like and how to measure it. GPT-3 defined success as conforming to the way English has been used in several billion words of books, reports, websites, social media and written conversation.

If you think this amounts to no more than clever mimicry, you might be both underestimating mimicry and overestimating what humans do when they use language.

Consider the following poem. I fed the first four and a half lines (up to “a pair of eyes”) into GPT-3, and let it do its thing. The first line (which I wrote) is a rip-off of a WH Auden poem. I wanted to test whether the machine could rhyme. I found the result unsettling.

Walking out one evening
I woke up in my bed
The light running through the blind
Stuck a beam across my head

A pair of eyes looked out of mine
And watched me as I lay
I wondered if I was asleep
Or if I'd died that day

The eyes were not my own
But I felt no fear
The body that they looked from
Was lying very near

The eyes just stared and stared
And I knew they wanted me
I rose up from my bed
And walked across to see

The body on the floor
Was lying very still
The eyes were staring up at me
But the body had no will

So the machine can rhyme. It can also paint nightmarish scenes. Let me be clear: this poem did not exist before I prompted the computer to write it. Cut and paste the words into Google and you won’t find a match.

You could say the poem doesn’t mean anything, having been composed by a mindless network, but that doesn’t stop me reading meaning into the words. It is very easy to imagine a future GPT that has been trained in the conventions of long-form storytelling, and has no problem churning out novels in the style of Dan Brown, Lee Child or Agatha Christie. Given that one of the overriding human motivations is to find out “what happened”, there’s no reason to think these books would not be enjoyable to read.

The shift

For writing and editing as a profession, GPT-3 and its successors represent a revolution. There has been a lot of debate about just how good the model is. GPT-3 often demonstrates a kind of mad confidence when it is way off beam (it told my mate Dave that kangaroos are native to New Zealand). Physicists have criticised it because it can’t do acceleration calculations and humanities professors want to shut it down because it demonstrates bias. I find the objections beside the point, as though an alien had landed on Earth and someone protested that it was stupid. The machine is saying things. The very fact that we are engaging with the meaning of those words is enough. And it can already write better English than many journalists.

Here’s a list of editing jobs the machines can already do in controlled environments:

- Correct grammar, spelling, and adherence to house style

- Re-write convoluted or jargon-filled paragraphs

- Summarise in straightforward language

- Determine whether sentences are written well

- Compose within story templates (straight news, features, sport, etc.) given a set of facts

None of this is amazing to us anymore.

The “higher order” editorial functions – such as probing stories to find holes or trying to make a boring story interesting – are highly dependent on subject area expertise, local knowledge, and an understanding of human psychology. You might think such things will never succumb to the network, but I’m confident now that they will. It’s not necessary for the machines to get it right every time. All they have to do is ask a useful question, just like good editors do.

For those of us who have built a career on the ability to string words together, GPT-3 and its successors require a significant mental shift.

There will also be cultural changes as unintelligible or difficult texts succumb to machine summary. Consider how specialist areas of language – scientific papers, legal cases, medical studies, academic journals – will be laid bare. In these cases, language not only communicates but also serves as a gatekeeper to keep the uninitiated out of high-status realms. There will be great resentment and turmoil.

A new oracle

For the past few days, whenever I have sat down at my desk, I have consulted GPT-3. It is very natural for me to regard the machine as a kind of oracle. Its inner workings are known to no one, not even its creators. I type in a few sentences (in italics) and ask for its utterance.

“Hal woke up, ate an orange, went for a run, and then sat down to work. The first thing he did was to write a story that he’d been thinking about for a while. That took a couple of hours, and that set him up for the rest of the day.”

This is mundane and brief. But it’s also grammatically correct and meaningful, and it turned out to be exactly what I had to do.